I’ve previously written about our development of Bloomreach Clarity, our conversational shopping agent, from how we found the right balance with search to how we’re powering the conversational experience.

While those posts highlighted how we approached early challenges and transformed product discovery, a lot of the information was shared at a high level. In this post, I want to dig deeper into our architecture and explain how we make conversational shopping work. Let’s explore the technologies that power Clarity.

Bridging User Queries With AI Knowledge

Let’s start from the end user’s perspective. When someone interacts with Clarity, what’s happening under the hood?

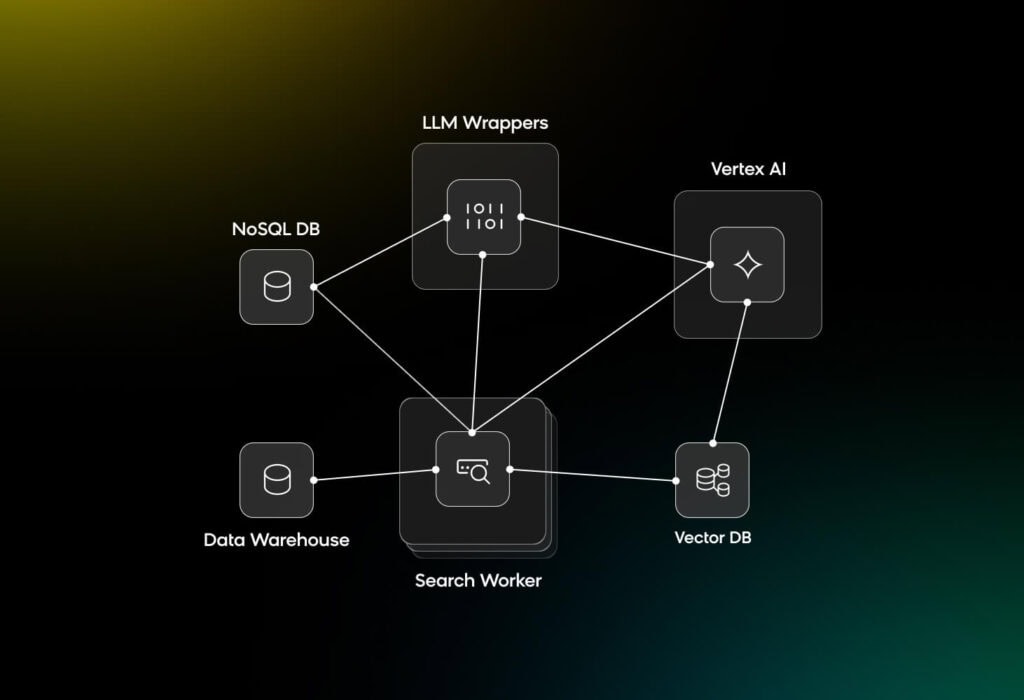

Our solution includes a plugin frontend called the Bloomreach Weblayer, short for web overlays. Our backend consists of an agentic framework with LLMs at the center. In our case, we use Vertex AI, and more specifically Gemini 2.0 Flash, for the best balance of speed and cost.

These two layers work in conjunction to figure out the user’s intent based on their query. Is the user searching for a specific product in a specific category? Or are they using more generic terms?

For a generic query (e.g., “shirts”), a call is made to the Clarity search agent, which will determine if the search term is too broad. If it is, Clarity will go back to the user with follow-up questions (e.g., “What kind of shirts are you interested in?” or “Are there any specific brands you’d like to see?”). This will help turn the generic query into a more specific one.
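To make the routing step concrete, here is a minimal sketch of the broad-versus-specific decision. It is not Clarity’s actual code: the names `AgentTurn`, `handle_query`, and `stub_is_too_broad` are hypothetical, and the one-word heuristic is only a stand-in for the judgment the search agent makes with the LLM.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AgentTurn:
    action: str  # "ask_follow_up" or "search"
    query: str
    follow_ups: list[str] = field(default_factory=list)


def stub_is_too_broad(query: str) -> bool:
    """Hypothetical stand-in for the LLM call: one-word queries count as broad."""
    return len(query.split()) <= 1


def handle_query(
    query: str, is_too_broad: Callable[[str], bool] = stub_is_too_broad
) -> AgentTurn:
    """Either ask the shopper to narrow the query or pass it on to search."""
    query = query.strip()
    if is_too_broad(query):
        return AgentTurn(
            action="ask_follow_up",
            query=query,
            follow_ups=[
                f"What kind of {query} are you interested in?",
                "Are there any specific brands you'd like to see?",
            ],
        )
    return AgentTurn(action="search", query=query)


broad = handle_query("shirts")
specific = handle_query("blue jeans under $200")
```

Passing the broadness check in as a callable keeps the routing logic testable without a live model; in a real deployment that callable would wrap the LLM request.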

When the query has enough specificity, such as “blue jeans under $200,” the model constructs a precise filter schema (e.g., price < $200, color = blue). Then, the Vertex AI embedding model sends back a vector embedding for the product, which, when combined with the filters, ensures precision and relevance. All of these products are ingested from sources such as Bigtable, S3, Shopify, or any other source passed in through our ingestion API, and are then stored along with their vectors in Qdrant for the LLM to pull from.
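The combination of structured filters and vector similarity can be illustrated with a small in-memory sketch. This is an assumption-heavy stand-in, not how Clarity or Qdrant implement it: the `Product` class, the filter keys `color` and `max_price`, the three-dimensional vectors, and the query vector are all made up for illustration. In production, the vectors would come from the embedding model and the filtered search would run inside the vector store.

```python
import math
from dataclasses import dataclass


@dataclass
class Product:
    name: str
    color: str
    price: float
    vector: list[float]  # made-up embedding, for illustration only


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def matches(product: Product, filters: dict) -> bool:
    """Apply the hard constraints extracted from the query."""
    if "color" in filters and product.color != filters["color"]:
        return False
    if "max_price" in filters and product.price >= filters["max_price"]:
        return False
    return True


def search(catalog, query_vector, filters, top_k=3):
    """Filter first, then rank the survivors by vector similarity."""
    candidates = [p for p in catalog if matches(p, filters)]
    candidates.sort(key=lambda p: cosine(query_vector, p.vector), reverse=True)
    return candidates[:top_k]


CATALOG = [
    Product("Slim blue jeans", "blue", 120.0, [0.9, 0.1, 0.0]),
    Product("Designer blue jeans", "blue", 260.0, [0.95, 0.05, 0.0]),
    Product("Black jeans", "black", 90.0, [0.8, 0.2, 0.1]),
    Product("Blue rain jacket", "blue", 150.0, [0.1, 0.9, 0.2]),
]

# "blue jeans under $200" expressed as filters plus a hypothetical query vector
filters = {"color": "blue", "max_price": 200.0}
results = search(CATALOG, [1.0, 0.0, 0.0], filters)
```

The filters remove items that violate a hard constraint (the designer jeans are over budget, the black jeans are the wrong color), while similarity ordering ranks what remains, so the jeans land above the rain jacket.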

Generating Relevant Questions for Product Discovery

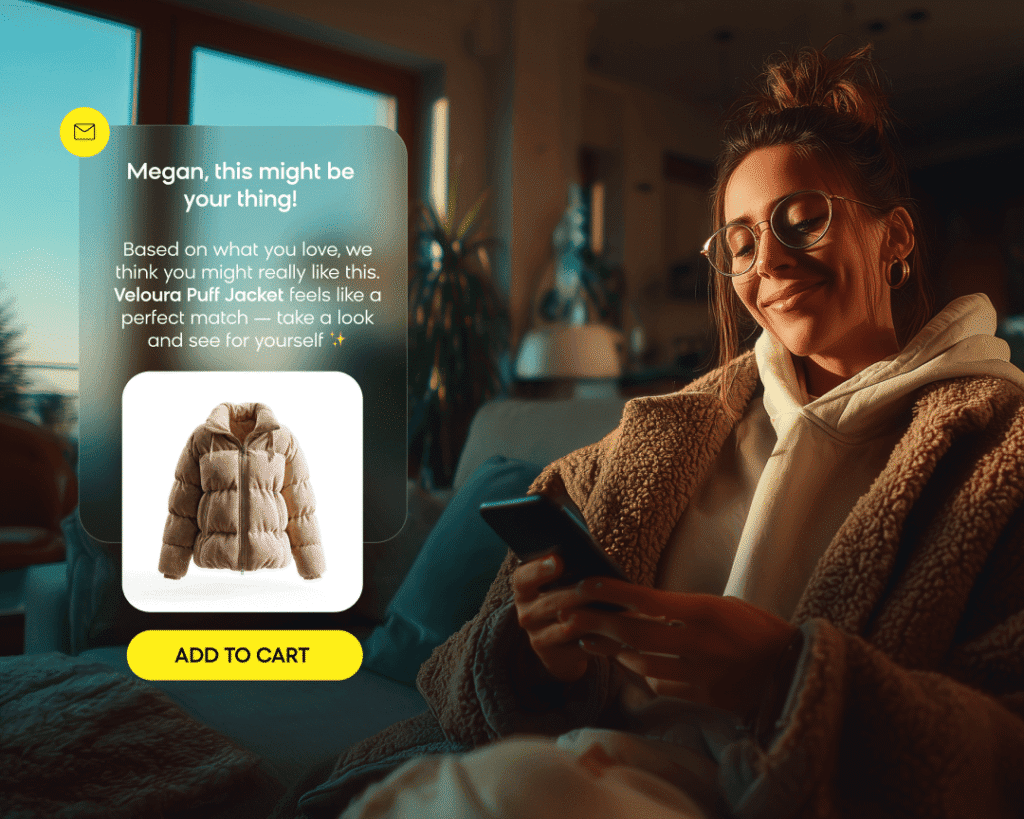

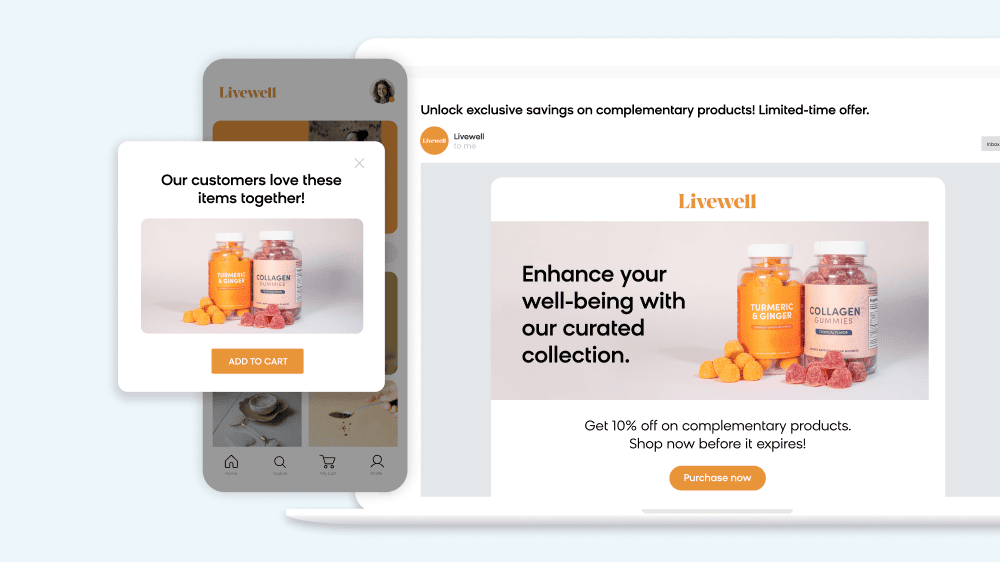

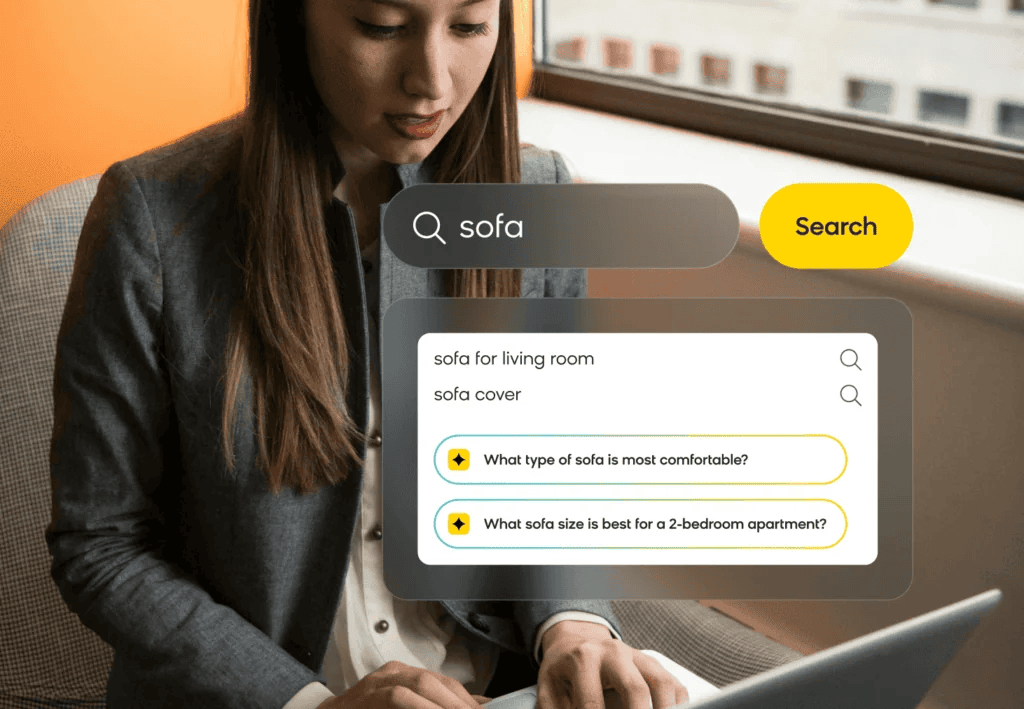

Clarity isn’t confined to the chat window, though. We’ve also built Clarity into the rest of the product discovery experience with thought-starter questions. So, if a shopper starts typing a query into the search bar, we’ll populate common questions related to their intended search term. Similarly, these questions will appear in other parts of the site, such as product detail pages (PDPs) and the checkout page.

Here’s how this works. An offline process generates questions by making calls to the Gemini model and asking it to create 10 representative questions for the specified product in the catalog. However, we only want to show questions that we can actually answer.

So, for every question, we act as a visitor asking it. Our agent interacts with Vertex AI just as it would for a live query and generates an answer. But we still need to validate the answer, so Clarity makes another call to the LLM to ensure the answer is accurate and relevant.

As a result, even though we ask for 10 questions, we may only show a handful of them, depending on the validation between the question-answer pairs. In this way, we ensure that shoppers get a better experience free from hallucinations or inaccurate answers.
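The generate, answer, and validate loop described above can be sketched as follows. The three callables stand in for the LLM calls, and everything here (function names, the sample product, the fake implementations) is hypothetical; it only shows the shape of the pipeline, not the real prompts or validation criteria.

```python
from typing import Callable


def build_thought_starters(
    product: dict,
    generate_questions: Callable[[dict, int], list[str]],
    answer: Callable[[dict, str], str],
    validate: Callable[[dict, str, str], bool],
    n: int = 10,
) -> list[tuple[str, str]]:
    """Keep only the question-answer pairs that pass validation."""
    approved = []
    for question in generate_questions(product, n):
        candidate = answer(product, question)  # answer as if a visitor asked
        if candidate and validate(product, question, candidate):
            approved.append((question, candidate))
    return approved


# Fake stand-ins for the three LLM calls, so the sketch runs offline.
PRODUCT = {
    "name": "Trail Runner",
    "facts": {
        "Is it waterproof?": "Yes, it has a waterproof membrane.",
        "What sizes are available?": "Sizes 6 through 13.",
    },
}


def fake_generate(product: dict, n: int) -> list[str]:
    questions = list(product["facts"]) + ["Is it made from recycled materials?"]
    return questions[:n]


def fake_answer(product: dict, question: str) -> str:
    # Falls back to an ungrounded guess, which validation should reject.
    return product["facts"].get(question, "Probably, most shoes are.")


def fake_validate(product: dict, question: str, candidate: str) -> bool:
    return candidate in product["facts"].values()


pairs = build_thought_starters(PRODUCT, fake_generate, fake_answer, fake_validate)
```

Here three questions are generated but only two survive, because the third answer is not grounded in the product data. That mirrors why asking for 10 questions may yield only a handful on the site.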

What’s Next

One of the challenges we’re running into now is the time and cost it takes to make these LLM calls to generate answers. For now, we’re mostly focused on importing the most common or high-priority queries for each site (e.g., top-ranked products). This helps us limit the scope while still meeting the needs of a large percentage of customers.
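A rough back-of-the-envelope sketch shows why limiting scope matters. It assumes, purely for illustration, that each product costs one generation call plus one answer call and one validation call per question; the real number of calls per answer may be higher. The `rank` field, the product IDs, and the call budget are hypothetical.

```python
def calls_per_product(num_questions: int = 10) -> int:
    """One generation call, plus one answer and one validation call per question."""
    return 1 + 2 * num_questions


def select_products(products: list[dict], call_budget: int, num_questions: int = 10) -> list[dict]:
    """Pick the top-ranked products that fit within the LLM call budget."""
    limit = call_budget // calls_per_product(num_questions)
    ranked = sorted(products, key=lambda p: p["rank"])  # rank 1 is highest priority
    return ranked[:limit]


products = [
    {"id": "sku-3", "rank": 3},
    {"id": "sku-1", "rank": 1},
    {"id": "sku-2", "rank": 2},
]
selected = select_products(products, call_budget=50)
```

Under these assumptions each product needs 21 calls, so a budget of 50 calls covers only the two highest-ranked products.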

However, we’re also seeing LLMs continue to grow more sophisticated. We’re currently exploring ways to use LLMs to completely take over the orchestration flow, which means we’d be able to give a lot of context to the model and have it figure out what kind of queries to execute.

Clarity is already providing incredible conversational experiences to shoppers, and as we continue experimenting and innovating with AI, we’ll only see it improve with time. Check out Bloomreach Clarity for yourself to see how conversational shopping can transform your site.