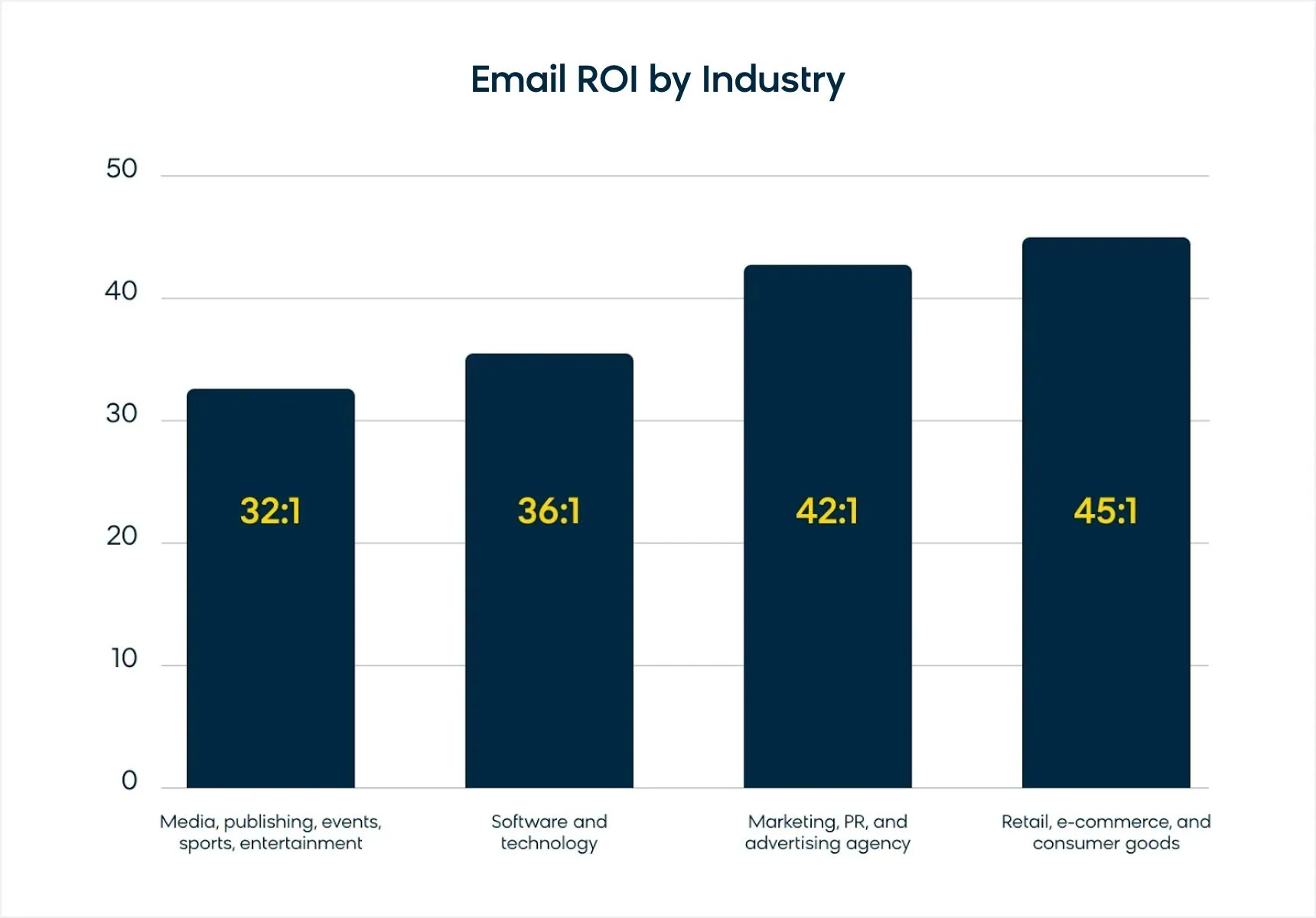

Email marketing remains the highest-ROI digital marketing channel in 2025, generating $36-42 for every dollar spent, but success depends on continuous optimization. A/B testing for email campaigns is your secret weapon to maximize performance, with strategic brands like River Island achieving 30%+ improvements in key metrics through systematic testing.

Most marketers know they should “test everything,” but where should you start? Which variables drive the biggest impact? How do you avoid the testing mistakes that waste time and skew results?

This comprehensive guide delivers 15 actionable tips, proven best practices, and real-world examples to transform your email testing strategy. You’ll learn how to design statistically sound tests, interpret results correctly, and leverage AI for personalized optimization that beats traditional A/B testing.

What Is A/B Testing in Email Marketing?

A/B testing in email marketing involves comparing two variations of a single email element to determine which performs better with your audience. This scientific approach eliminates guesswork by sending different versions to small audience segments and measuring actual performance data.

The process is straightforward: create two email variants (A and B), send each to a randomly selected subset of subscribers, measure results against your key performance indicators, then deploy the winning version to your remaining audience.
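The random split described above can be sketched in a few lines of Python. This is a minimal illustration rather than any platform's implementation. The function name `split_for_ab_test`, the 20% test share, and the sample addresses are hypothetical, and most email tools perform this split for you.

```python
import random


def split_for_ab_test(subscribers, test_fraction=0.2, seed=None):
    """Randomly split a list into variant A, variant B, and a remainder.

    test_fraction is the share of the list used for the test, divided
    evenly between A and B; the remainder later receives the winner.
    """
    rng = random.Random(seed)
    shuffled = list(subscribers)
    rng.shuffle(shuffled)  # random assignment avoids selection bias
    half = int(len(shuffled) * test_fraction) // 2
    group_a = shuffled[:half]
    group_b = shuffled[half:2 * half]
    remainder = shuffled[2 * half:]
    return group_a, group_b, remainder


# Hypothetical list of 10,000 subscribers
subscribers = [f"user{i}@example.com" for i in range(10_000)]
group_a, group_b, remainder = split_for_ab_test(subscribers, test_fraction=0.2, seed=42)
print(len(group_a), len(group_b), len(remainder))  # 1000 1000 8000
```

Shuffling before slicing keeps the groups random, so differences in results can be attributed to the email variant rather than to how subscribers were picked.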

This methodology transforms email optimization from assumptions into data-driven decisions that consistently improve campaign performance.

Benefits of A/B Testing Emails

Systematic email testing delivers measurable advantages that compound over time, making it essential for competitive email marketing in 2025.

Reduce Risk While Innovating

Testing new approaches on small audience segments protects your brand reputation while enabling continuous improvement. Rather than risking your entire subscriber base on untested ideas, you can validate changes before full deployment.

This approach lets you experiment boldly with creative concepts, messaging strategies, and design elements without jeopardizing overall campaign performance.

Make Data-Driven Marketing Decisions

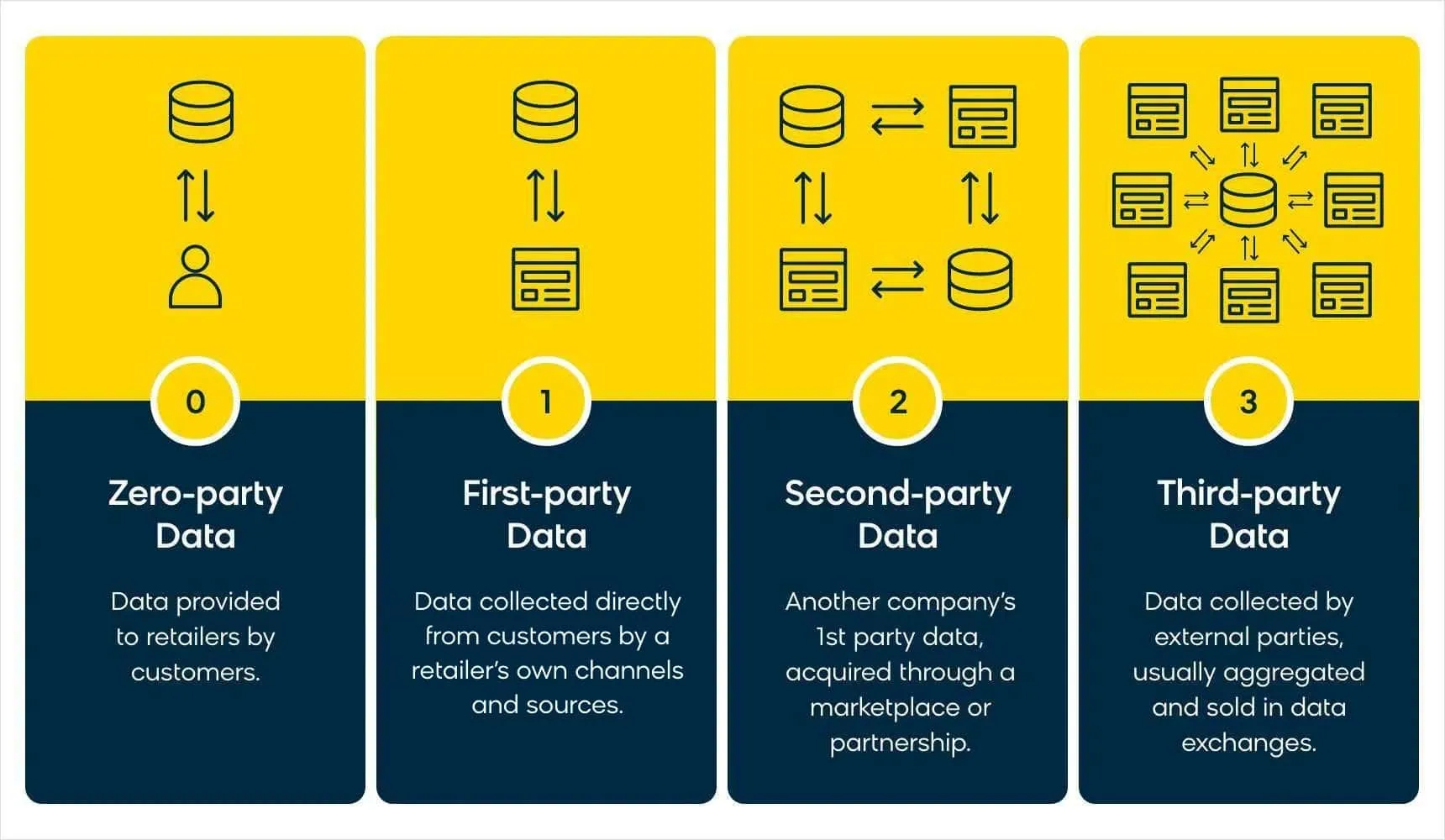

Email A/B testing generates valuable first-party data that reveals exactly how your audience responds to different approaches. This eliminates reliance on industry benchmarks or assumptions that may not apply to your specific market.

First-party data represents direct audience feedback through their actions—opens, clicks, conversions, and unsubscribes. This behavioral data provides the most reliable foundation for optimizing future campaigns.

Maximize Your Highest-Performing Channel

Email marketing continues to deliver exceptional ROI across industries, consistently outperforming other digital channels with 36:1 returns compared to SEO’s 22:1, making optimization efforts highly impactful for overall marketing performance.

With 60% of consumers preferring email communication from brands and email marketing generating substantial returns across industries, improving your email performance directly impacts bottom-line results.

Systematic testing ensures you’re maximizing this high-impact channel rather than leaving performance improvements on the table.

15 Email A/B Testing Pro Tips & Best Practices

Follow these expert strategies to design tests that deliver actionable insights and measurable improvements.

1. Test One Variable at a Time

Limit each test to a single element — subject line, CTA button, image, or copy — to clearly identify what drives performance changes. Testing multiple variables simultaneously makes it impossible to determine which change influenced results.

This focused approach provides clear, actionable insights you can confidently apply to future campaigns.

2. Develop Clear Testing Hypotheses

Before launching any test, formulate a specific prediction about how your change will impact performance. A strong hypothesis includes the change you’re making, the expected outcome, and the reasoning behind your prediction.

For example, you can hypothesize that “adding urgency language to subject lines will increase open rates by 15% because our audience responds well to time-sensitive offers.” A hypothesis this specific tells you which metric to track and what result would confirm or refute it, so every test produces a clear insight.

3. Calculate Proper Sample Sizes

Ensure statistical validity by testing with adequate audience segments. Your sample size requirements depend on baseline conversion rates, desired confidence levels, and the minimum effect size you want to detect. For example, detecting a 20% relative improvement in a 40% open rate (from 40% to 48%) requires approximately 592 subscribers per variation at 95% confidence and 80% statistical power.

As a rule of thumb, your email list should contain at least 1,000 contacts to run meaningful A/B tests, though the exact requirement depends on your baseline rates and the smallest improvement you want to detect.
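To see where a figure like 592 comes from, here is a sketch of a standard two-proportion sample size formula in Python. It assumes a two-sided test at 95% confidence and 80% power, which are common calculator defaults. The function name `sample_size_per_variation` is hypothetical, and online calculators use slightly different variants of this formula, so expect small differences between tools.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variation(baseline, relative_lift, alpha=0.05, power=0.80):
    """Recipients needed in EACH variation to detect a relative lift in a rate.

    Uses a two-sided test on two proportions. The null-hypothesis spread is
    based on the baseline rate; the alternative uses each variant's own rate.
    """
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    delta = p2 - p1
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 at 95% confidence
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 at 80% power
    spread = (z_alpha * sqrt(2 * p1 * (1 - p1))
              + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2)))
    return ceil((spread / delta) ** 2)


# 40% open rate, hoping to detect a 20% relative lift (40% -> 48%)
print(sample_size_per_variation(0.40, 0.20))
```

For a 40% baseline and a 20% relative lift, this lands within a few subscribers of the 592 quoted above. Rerun it with a 2% click rate and a 10% relative lift and the requirement climbs into the tens of thousands per variation, which is why subtle improvements need large lists.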

4. Set Minimum Confidence Thresholds

Only implement changes when results reach at least 95% statistical confidence (a p-value below 0.05). This means that if the two variants actually performed the same, a difference as large as the one you observed would appear by chance less than 5% of the time.

Lowering the confidence threshold increases the rate of false positives, and acting on false winners can harm long-term performance.
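As an illustration of what a 95% confidence threshold checks, here is a minimal two-proportion z-test in Python, the kind of test many significance calculators apply to open, click, or conversion rates. The counts are hypothetical, and the sketch relies on a normal approximation that works best with reasonably large samples.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Return (z, two-sided p-value) for the difference between two rates."""
    rate_a = successes_a / n_a
    rate_b = successes_b / n_b
    # Pooled rate: the best estimate if both variants truly perform the same
    pooled = (successes_a + successes_b) / (n_a + n_b)
    standard_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / standard_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical opens: A 240/600 (40%), B 288/600 (48%)
z, p = two_proportion_z_test(240, 600, 288, 600)
print(f"z = {z:.2f}, p = {p:.4f}, significant at 95%: {p < 0.05}")
```

A result only clears the 95% threshold when the p-value falls below 0.05; a smaller gap on the same sample size (say 240 versus 250 opens) would not.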

5. Allow Sufficient Testing Duration

Run tests long enough to account for audience behavior patterns and external factors. Depending on your email volume, a test duration of 1-2 weeks is usually enough to capture representative subscriber behavior.

Avoid the temptation to stop tests early when you see promising initial results.
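Why does stopping early hurt? The Python sketch below simulates A/A tests, where both variants share the same true conversion rate, so any declared winner is a false positive. It compares checking significance once at the planned end against peeking after every batch and stopping at the first p < 0.05. The batch size, number of peeks, and 20% rate are arbitrary assumptions chosen for illustration.

```python
import random
from math import sqrt
from statistics import NormalDist


def p_value(conv_a, conv_b, n):
    """Two-sided two-proportion z-test p-value with n recipients per variant."""
    pooled = (conv_a + conv_b) / (2 * n)
    if pooled in (0.0, 1.0):
        return 1.0  # no variation at all, so no evidence of a difference
    standard_error = sqrt(pooled * (1 - pooled) * 2 / n)
    z = (conv_b - conv_a) / n / standard_error
    return 2 * (1 - NormalDist().cdf(abs(z)))


def false_positive_rates(sims=1000, peeks=10, batch=100, rate=0.2, seed=7):
    """Simulate A/A tests, where every declared winner is a false positive."""
    rng = random.Random(seed)
    peeking_wins = fixed_wins = 0
    for _ in range(sims):
        conv_a = conv_b = n = 0
        declared_early = False
        for _ in range(peeks):
            conv_a += sum(rng.random() < rate for _ in range(batch))
            conv_b += sum(rng.random() < rate for _ in range(batch))
            n += batch
            if p_value(conv_a, conv_b, n) < 0.05:
                declared_early = True  # a peeker would stop and ship here
        peeking_wins += declared_early
        fixed_wins += p_value(conv_a, conv_b, n) < 0.05  # one check at the end
    return peeking_wins / sims, fixed_wins / sims


peeking, fixed = false_positive_rates()
print(f"false positives with peeking: {peeking:.1%}, fixed horizon: {fixed:.1%}")
```

In runs like this, the peeking strategy declares several times more false winners than the roughly 5% expected from a single check at the planned end, even though nothing differs between the variants.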

6. Choose the Right Success Metrics

Align your measurement approach with test objectives. Subject line tests should focus on open rates, while content tests should emphasize click-through rates and conversions.

Tracking irrelevant metrics can lead to misguided conclusions about test performance.
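For reference, here is a small Python sketch of how common engagement rates are computed from raw counts, paired with the metric each test type would be judged on. Definitions vary between platforms (some compute conversion rate per click rather than per delivered email), so treat these formulas and the hypothetical `PRIMARY_METRIC` mapping as illustrative assumptions.

```python
def email_metrics(delivered, opens, clicks, conversions):
    """Common engagement rates; some platforms define these differently."""
    return {
        "open_rate": opens / delivered,
        "click_through_rate": clicks / delivered,
        "click_to_open_rate": clicks / opens if opens else 0.0,
        "conversion_rate": conversions / delivered,
    }


# Illustrative mapping from test type to the metric that should decide it
PRIMARY_METRIC = {
    "subject_line": "open_rate",
    "cta": "click_through_rate",
    "body_content": "conversion_rate",
}

# Hypothetical results for one variant
metrics = email_metrics(delivered=5000, opens=2000, clicks=250, conversions=50)
print(metrics[PRIMARY_METRIC["subject_line"]])  # 0.4
```

Deciding the primary metric before the test starts keeps you from picking whichever number happens to look best afterward.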

7. Segment Results by Audience Type

Analyze test performance across different customer segments — new vs. returning, high-value vs. occasional buyers, different demographic groups — to understand broader implications.

A test winner overall might perform poorly with specific valuable segments.
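The segment check can be sketched in Python as follows. The results are hypothetical, chosen so that variant B wins overall while variant A does better with returning customers. Segment samples are smaller than the full test, so a per-segment difference like this one should pass its own significance check before you act on it.

```python
from collections import defaultdict

# Hypothetical results: (segment, variant) -> (recipients, conversions)
RESULTS = {
    ("new", "A"): (1000, 30),
    ("new", "B"): (1000, 60),
    ("returning", "A"): (500, 50),
    ("returning", "B"): (500, 40),
}


def conversion_rates(results):
    """Return per-segment rates and overall rates for each variant."""
    by_segment = defaultdict(dict)
    totals = defaultdict(lambda: [0, 0])  # variant -> [recipients, conversions]
    for (segment, variant), (recipients, conversions) in results.items():
        by_segment[segment][variant] = conversions / recipients
        totals[variant][0] += recipients
        totals[variant][1] += conversions
    overall = {v: conv / sent for v, (sent, conv) in totals.items()}
    return dict(by_segment), overall


by_segment, overall = conversion_rates(RESULTS)
overall_winner = max(overall, key=overall.get)
print(f"overall winner: {overall_winner} {overall}")
for segment, rates in by_segment.items():
    segment_winner = max(rates, key=rates.get)
    note = "" if segment_winner == overall_winner else "  <- differs from overall winner"
    print(f"{segment}: winner {segment_winner}{note}")
```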

8. Document Everything Thoroughly

Maintain detailed records of test setups, hypotheses, results, and implementation decisions. This documentation helps identify patterns over time and prevents repeating unsuccessful experiments.

Create a testing calendar that tracks seasonal performance variations and audience behavior changes.

9. Test Continuously, Not Sporadically

Develop a systematic testing schedule rather than running occasional experiments. Consistent testing builds a knowledge base about your audience preferences and keeps your campaigns optimized.

Aim to have at least one A/B test running at all times across your email program.

10. Account for External Factors

Consider holidays, industry events, economic conditions, and seasonal trends that might influence test results. Document these factors to better interpret performance data.

A test conducted during Black Friday will yield different insights than the same test run in January.

11. Validate Winners Through Retesting

Confirm significant results by retesting winning variants against new alternatives or in different contexts. This helps distinguish genuine improvements from statistical flukes.

Repeat successful tests with different audience segments to verify broader applicability.

12. Focus on Meaningful Effect Sizes

Look beyond statistical significance to practical significance. A 2% improvement might be statistically valid, but it may not justify changing your entire email strategy.

Prioritize tests that can deliver meaningful business impact relative to your specific objectives and subscriber base size.
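One way to weigh practical significance is to compute a confidence interval for the lift and compare it with the smallest lift worth acting on. In this Python sketch, the counts and the `MIN_WORTHWHILE_LIFT` threshold of half a percentage point are hypothetical; your own threshold should come from your business goals.

```python
from math import sqrt
from statistics import NormalDist

MIN_WORTHWHILE_LIFT = 0.005  # hypothetical: +0.5 percentage points


def lift_interval(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Absolute lift (B minus A) with a normal-approximation confidence interval."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    standard_error = sqrt(rate_a * (1 - rate_a) / n_a + rate_b * (1 - rate_b) / n_b)
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    lift = rate_b - rate_a
    return lift, lift - z * standard_error, lift + z * standard_error


def assess(conv_a, n_a, conv_b, n_b, min_lift=MIN_WORTHWHILE_LIFT):
    """Return (statistically significant, practically significant)."""
    _, low, _ = lift_interval(conv_a, n_a, conv_b, n_b)
    statistically_significant = low > 0        # interval excludes "no difference"
    practically_significant = low >= min_lift  # even the pessimistic end is worth it
    return statistically_significant, practically_significant


# Hypothetical: 2.00% vs 2.15% conversion on 100,000 recipients per variant
print(assess(2000, 100_000, 2150, 100_000))  # (True, False)
```

Here the lift is real but small: the whole interval sits below the hypothetical threshold, so the change may not justify a strategy shift.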

13. Test Across Multiple Email Types

Apply testing strategies to welcome series, promotional campaigns, newsletters, and transactional emails. Different email types often require different optimization approaches.

What works for promotional emails may not apply to relationship-building messages.

14. Use Progressive Testing Strategies

Start with high-impact, easily testable elements like subject lines and CTAs before moving to complex variables like email design or send timing.

This approach builds testing competency while delivering quick wins that demonstrate program value.

15. Integrate Testing With Your Broader Strategy

Align A/B testing insights with your overall email marketing strategy and customer journey optimization. Use test results to inform not just email tactics but broader marketing approaches.

Testing insights can positively influence website personalization, ad targeting, content marketing strategies, and more.

How to Interpret A/B Test Results

Proper result interpretation separates successful email marketers from those who make costly optimization mistakes.

Reading Your Test Data Correctly

Successfully interpreting A/B test results requires a two-pronged approach: assessing statistical significance and practical significance.

Statistical significance ensures your results are trustworthy. Your tests need to factor in the right parameters, confidence thresholds, and sample sizes to make sure a winning variation actually reflects audience behavior.

Practical significance ensures your results are meaningful. This adds a real-world lens to the numbers your testing yields — a subject line that improves open rates by 2% might be statistically valid, but that doesn’t mean it moves the needle on conversions, revenue, or long-term customer engagement.

Both perspectives are essential to extract real value from A/B testing and spot the insights that warrant strategic changes.

Making Data-Driven Implementation Decisions

Know when to stop testing versus continuing experiments. If you’ve reached statistical significance with adequate sample sizes, implement the winning variant confidently.

For inconclusive results, consider extending test duration, increasing sample sizes, or revisiting your original hypothesis. Avoid making changes based on insufficient data.

It’s also important to adopt a holistic view of your data. Document external factors that might influence results — seasonal trends, competitive actions, or major news events — to better understand performance context.

7 Common A/B Testing Mistakes to Avoid

These critical errors can invalidate your test results and lead to poor optimization decisions.

1. Testing Multiple Variables Simultaneously

Changing subject lines, images, and CTAs in the same test makes it hard to identify which element drove performance changes. Stick to single-variable testing for clear insights.

2. Using Insufficient Sample Sizes

Testing with inadequate audiences often fails to achieve statistical significance, leading to unreliable conclusions and wasted effort. Lists under 50,000 total subscribers may struggle to detect subtle improvements, even though larger differences can be measured with far smaller samples.

3. Stopping Tests Prematurely

Ending tests early when you see promising results introduces bias and reduces statistical validity. Let tests run for predetermined durations regardless of interim performance.

4. Ignoring Statistical Significance

Implementing changes based on results that haven’t reached adequate confidence levels leads to false positives that can harm long-term performance.

5. Testing Without a Clear Hypothesis

Random testing without specific predictions wastes resources and provides little strategic value. Always start with clear hypotheses about expected outcomes.

6. Overlapping Test Audiences

Running multiple tests simultaneously with overlapping audiences creates interaction effects that skew results. Run tests sequentially or use completely separate audience segments.
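A common way to keep concurrent test audiences separate is deterministic, hash-based bucketing: each subscriber ID hashes to exactly one test, and a second hash picks the variant within that test. This Python sketch assumes string subscriber IDs; the test names and salt are hypothetical.

```python
import hashlib


def _bucket(key: str, buckets: int) -> int:
    """Map a string to a stable bucket number using SHA-256."""
    return int(hashlib.sha256(key.encode("utf-8")).hexdigest(), 16) % buckets


def assign(subscriber_id: str, tests: list, salt: str = "hypothetical-salt") -> tuple:
    """Place each subscriber in exactly one test, then pick A or B within it."""
    test = tests[_bucket(f"{salt}:{subscriber_id}", len(tests))]
    variant = "AB"[_bucket(f"{test}:{subscriber_id}", 2)]
    return test, variant


TESTS = ["subject_line_q3", "cta_color", "send_time"]  # hypothetical concurrent tests
print(assign("subscriber-123", TESTS))
```

Because the assignment depends only on the ID and salt, rerunning it always returns the same test and variant. The trade-off is that each test gets only a share of the list, so check sample size requirements per test.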

7. Failing to Account for External Factors

Ignoring holidays, industry events, or seasonal trends when interpreting results can lead to misguided conclusions about audience preferences.

Email A/B Testing Ideas to Try in 2025

These high-impact testing opportunities can significantly improve your email performance across key metrics.

Subject Line Optimization

Test personalization approaches (first name vs. location), urgency language, emoji usage, and question formats versus statement formats. Subject lines directly impact open rates, making them ideal for quick testing wins.

Personalized subject lines increase open rates by 26%, providing substantial improvement potential through strategic testing.

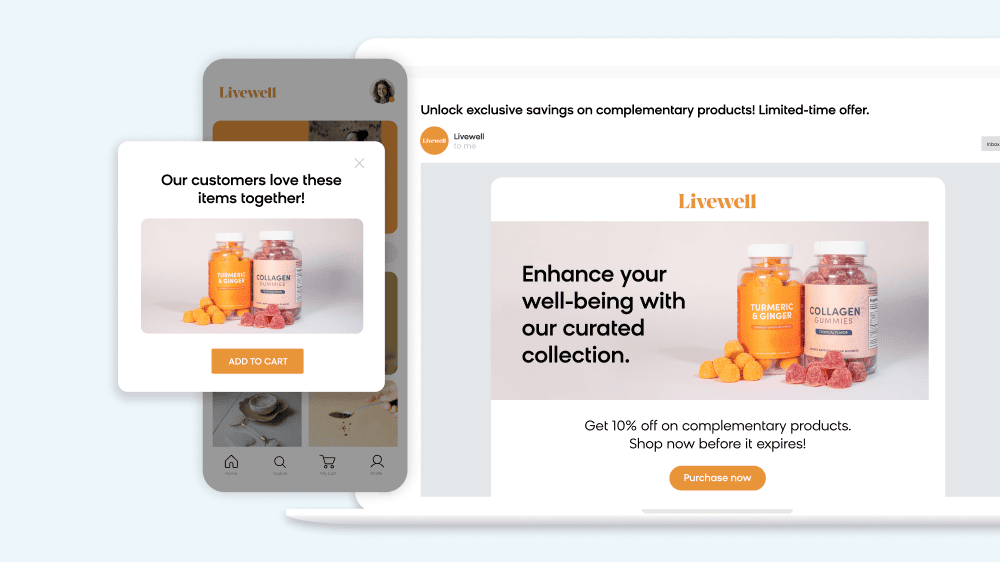

Call-to-Action Enhancement

Optimize button text (“Shop Now” vs. “Discover More”), colors, sizes, and placement within email layouts. Test single versus multiple CTAs to determine if focused messaging improves click-through rates.

Try adding urgency or value propositions directly to CTA buttons.

Content and Copy Testing

Compare long-form versus short-form content, different tone approaches (casual vs. professional), and various content structures (bullet points vs. paragraphs).

Test social proof elements like customer testimonials, reviews, and user-generated content to understand what builds trust with your audience.

Visual Element Optimization

Experiment with product images versus lifestyle photography, GIFs versus static images, and different image quantities per email.

Send Time and Frequency

Optimize email send times across different days and hours to identify peak engagement windows for your audience segments.

Test email frequency to see whether your audience prefers weekly or biweekly newsletters, or different cadences for promotional campaigns. Since 61% of consumers say they enjoy weekly promotional emails, a weekly cadence is a sensible starting point for these tests.

Design and Layout Variations

Compare minimalist designs versus content-rich layouts, different color schemes, and mobile-optimized versus desktop-focused designs — 55-65% of emails are opened on mobile devices, making phone-friendly layouts a valuable factor to test.

Test header styles, footer content, and overall email structure to maximize engagement across devices.

Real A/B Testing Success Stories

These Bloomreach customer examples demonstrate the significant impact of strategic email testing.

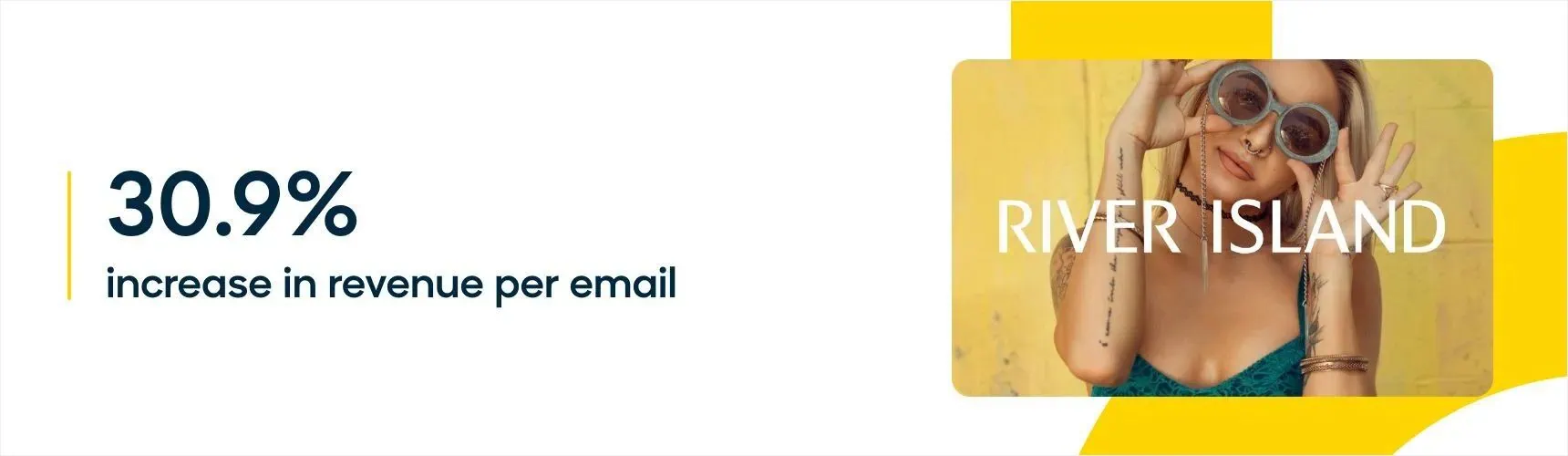

River Island: 30% Revenue Increase Through Strategic Testing

Fashion retailer River Island used systematic A/B testing to optimize their email program while reducing send frequency and improving customer experience.

Through careful testing of sending cadence, content types, and targeting strategies, River Island achieved:

- a 30.9% increase in revenue per email

- a 30.7% increase in orders per email

- a 22.5% reduction in overall send volume

This demonstrates how testing can simultaneously improve performance and enhance customer experience.

Whisker: 107% Conversion Lift Through Journey Testing

Whisker, creator of automated pet care products, tested consistent messaging across customer touchpoints to optimize their entire customer journey.

By testing personalized messaging that carried through from email content to the website experience, Whisker achieved:

- a 107% conversion rate increase

- a 112% revenue increase per user

- significantly improved customer journey consistency

These results show how testing can extend beyond individual emails to optimize entire customer experiences.

How AI Powers Better Email A/B Testing

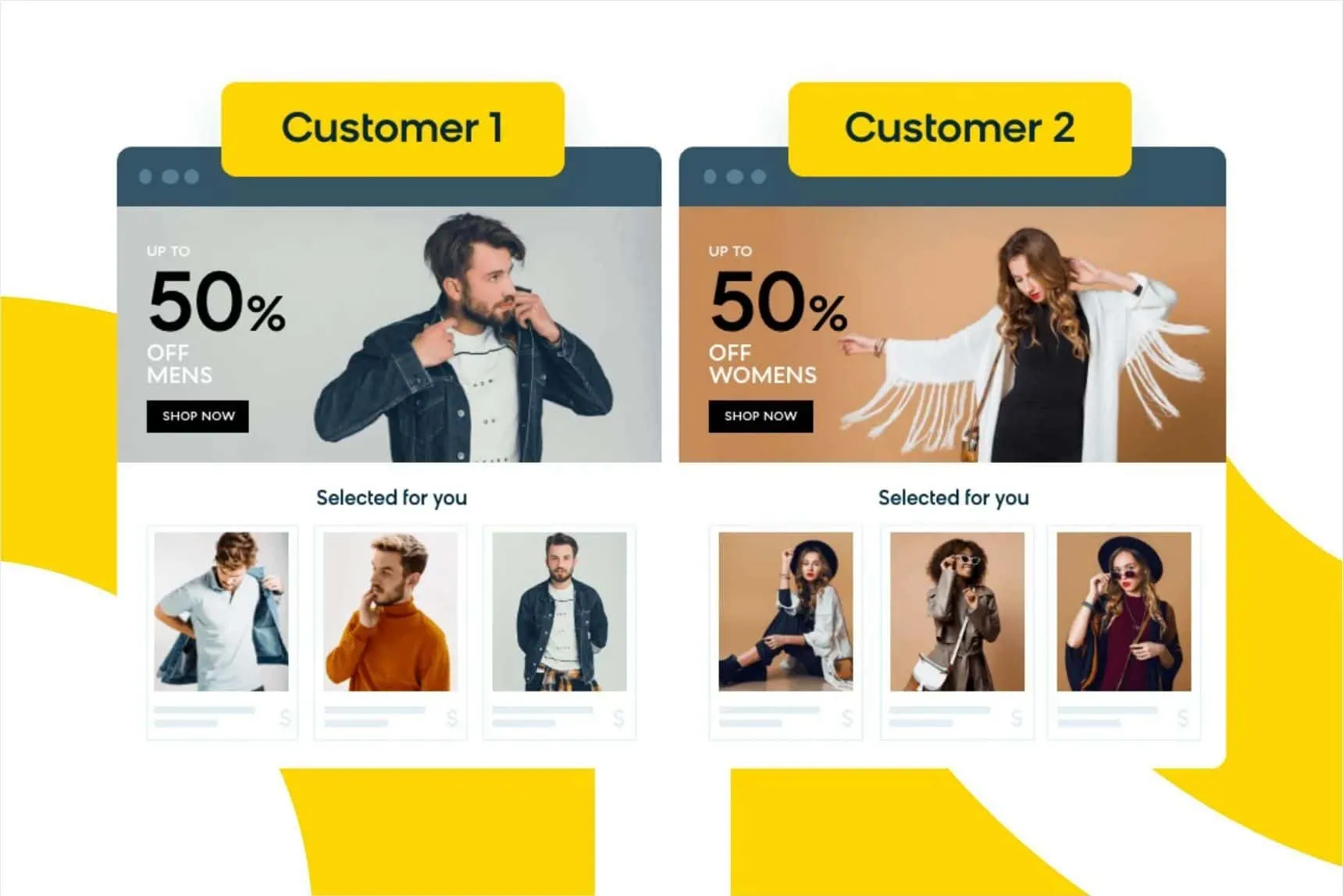

The main limitation of traditional A/B testing is that it picks a single winner based on how your audience responds on average. With AI-powered optimization, you can test and personalize experiences for individual recipients, aiming to send each one the variant they are most likely to respond to.

Beyond One-Size-Fits-All Testing

Standard A/B testing creates binary outcomes — variant A wins or variant B wins for everyone. But this approach ignores individual preferences that could improve results for specific audience segments.

For example, if you test discount codes against free shipping offers and discount codes win by 70% to 30%, traditional testing would send discount codes to everyone. Yet the 30% who responded to free shipping suggest that a meaningful share of your audience might have converted better with that offer.

AI-Powered Contextual Personalization

Contextual personalization is the AI-powered fix to this age-old issue. It uses machine learning to analyze individual customer context — purchase history, email engagement patterns, website behavior — and automatically select the optimal variant for each recipient.

This approach transforms the testing question from “which variant performs best overall?” to “which variant performs best for each individual customer?”

AI systems can process thousands of data points per customer to make these personalization decisions automatically, which can deliver higher performance than sending a single A/B test winner to everyone.
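To make the idea concrete, here is a deliberately simple stand-in for contextual personalization: an epsilon-greedy bandit that keeps separate conversion estimates for each customer context and usually sends the best-performing variant for that context. This is not how Bloomreach or any particular platform implements it. The contexts, the 10% exploration rate, and the conversion rates are hypothetical, and production systems use far richer customer features.

```python
import random
from collections import defaultdict


class ContextualEpsilonGreedy:
    """Keeps a conversion estimate per (context, variant) and usually sends
    the best-performing variant for the recipient's context."""

    def __init__(self, variants, epsilon=0.1, seed=None):
        self.variants = list(variants)
        self.epsilon = epsilon  # share of sends used to keep exploring
        self.rng = random.Random(seed)
        self.stats = defaultdict(lambda: [0, 0])  # (context, variant) -> [sends, conversions]

    def best(self, context):
        def estimate(variant):
            sends, conversions = self.stats[(context, variant)]
            return conversions / sends if sends else float("inf")  # try untested variants first
        return max(self.variants, key=estimate)

    def choose(self, context):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.variants)
        return self.best(context)

    def update(self, context, variant, converted):
        record = self.stats[(context, variant)]
        record[0] += 1
        record[1] += int(converted)


# Hypothetical true conversion rates the policy does not know in advance
TRUE_RATES = {
    ("deal_seeker", "discount_code"): 0.10, ("deal_seeker", "free_shipping"): 0.04,
    ("convenience", "discount_code"): 0.04, ("convenience", "free_shipping"): 0.10,
}

policy = ContextualEpsilonGreedy(["discount_code", "free_shipping"], seed=1)
world = random.Random(2)
for _ in range(20_000):
    context = world.choice(["deal_seeker", "convenience"])
    variant = policy.choose(context)
    policy.update(context, variant, world.random() < TRUE_RATES[(context, variant)])

for context in ("deal_seeker", "convenience"):
    print(context, "->", policy.best(context))
```

A single A/B winner would send the same offer to both groups; here each context ends up with its own best variant. The trade-off is that a fixed epsilon keeps spending a constant share of sends on exploration, which more sophisticated bandit methods reduce over time.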

Essential A/B Testing Tools & Resources

The right tools streamline your testing process and ensure reliable, actionable results.

Statistical Significance Calculators

Use a sample size calculator before launching tests to determine minimum audience requirements for reliable results. These tools factor in baseline conversion rates, desired confidence levels, and minimum detectable effects. Once the test ends, a significance calculator checks whether the observed difference clears your confidence threshold.

Advanced Testing Platforms

Modern email platforms offer integrated A/B testing with automated sample selection, variant distribution, and real-time results tracking. Look for platforms that provide:

- Automatic winner selection based on statistical significance

- Multivariate testing capabilities

- Integration with marketing automation workflows

- Detailed performance analytics and reporting

Bloomreach Testing Capabilities

Bloomreach Engagement offers enterprise-grade A/B testing capabilities with AI-powered optimization that goes beyond traditional testing approaches.

Our platform automatically calculates sample sizes, manages test distribution, and provides clear performance metrics with statistical significance indicators. Plus, with AI powering all your efforts, you can contextually personalize your tests to optimize for individual recipients rather than audience averages.

With seamless integration across email, SMS, web, and mobile channels, Bloomreach enables comprehensive testing strategies that optimize entire customer experiences.

Start A/B Testing With Bloomreach

Transform your email marketing performance with comprehensive A/B testing capabilities and AI-powered optimization that delivers personalized experiences at scale.

Bloomreach Engagement combines advanced testing tools with omnichannel orchestration, helping you build data-driven campaigns that consistently improve results.

Our platform integrates customer data, automation, AI, and analytics to support sophisticated testing strategies while maintaining the simplicity needed for day-to-day optimization.

Ready to implement systematic A/B testing that drives measurable results? Discover how Bloomreach can accelerate your email optimization and turn “test everything” from aspiration into reality.